Latest Facts

Games and Toys

Technology

Games and Toys

Technology

Biology

Society & Social Sciences

Historical Events

Health Science

Games and Toys

Physical Sciences

Culture & The Arts

Games and Toys

Entertainment

Sports

Technology

Sports

Sports

Entertainment

Public Health

Sports

Sports

Entertainment

Sports

People

Sports

Games and Toys

General

Society

Sports

Games and Toys

Sports

Sports

US States

Celebrity

Electronics

Celebrity

Popular Facts

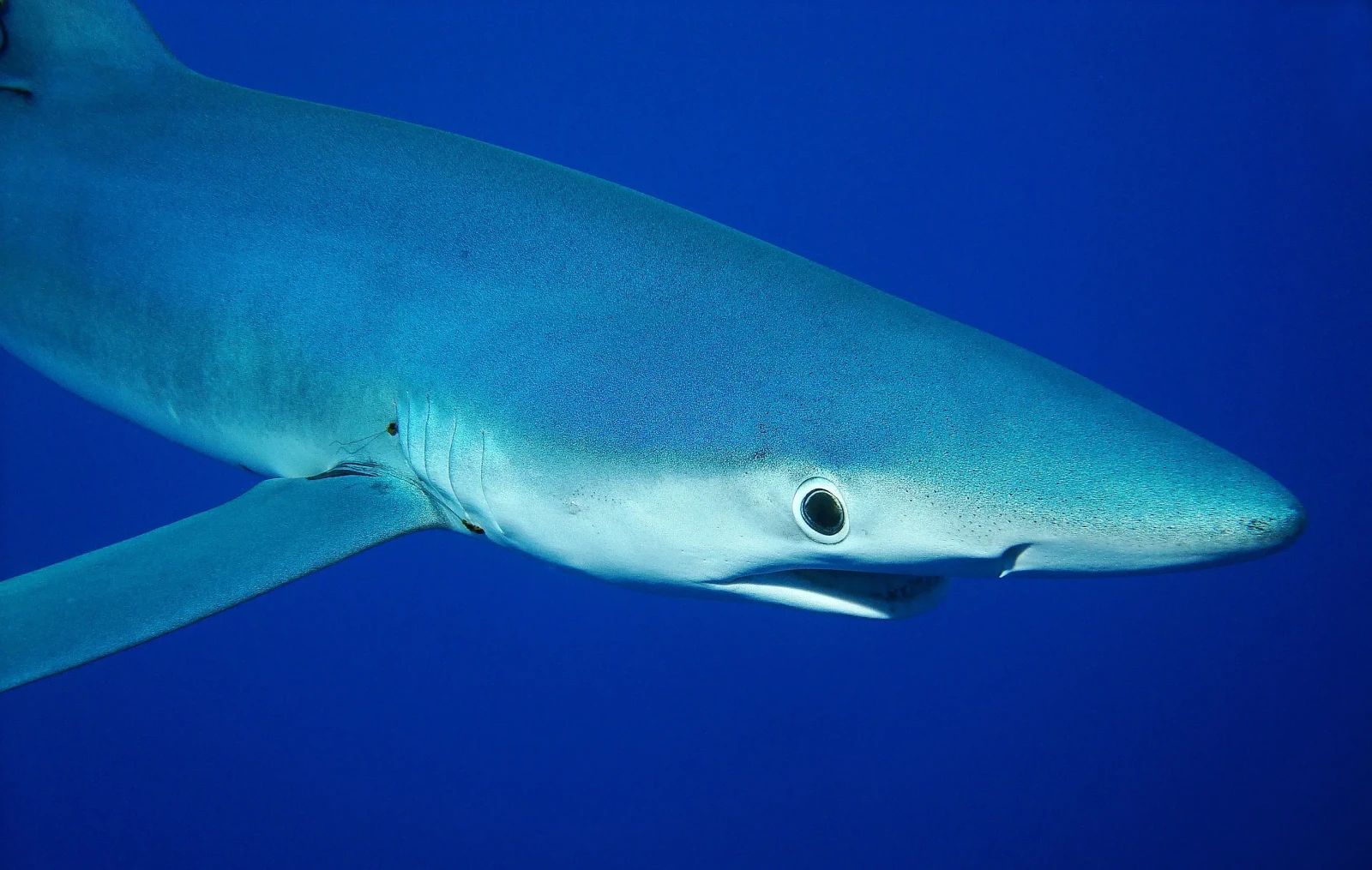

30 Incredible Marine Animals to See

Have you ever wondered what incredible creatures lurk beneath the ocean’s surface? From the tiniest plankton to the largest whales, the marine world is teeming with fascinating life forms. Imagine […]